Anyone can automate end-to-end tests!

Our AI Test Agent enables anyone who can read and write English to become an automation engineer in less than an hour.

In software testing, "testing tables" refer to structured methods for validating complex data interactions and decision-making processes. With software complexity increasing, testing tables help QA engineers verify whether systems handle data efficiently, particularly when dealing with conditional logic or large data sets. In this article, we’ll explore the importance of testing tables, common types, challenges, best practices, and automation tools.

Testing tables, especially decision tables, are crucial in software testing because they organize the many possible inputs and their expected results. They're especially useful for handling complex business rules and various conditions that affect software applications.

Decision table testing is a black-box testing approach that systematically records various input scenarios and their anticipated results in a tabular structure. It excels in systems where different inputs result in diverse outputs.

A decision table shows how inputs (causes) lead to outputs (effects). Because it enumerates every combination of inputs, it helps expose errors and missing requirements, and it makes complex rules easier for testers and developers to understand.
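As a minimal sketch of the idea, a decision table can be written as a mapping from condition combinations to expected results. The discount rules, the `DECISION_TABLE` name, and both helper functions below are hypothetical and exist only for illustration.

```python
from itertools import product

# Hypothetical rules for a discount feature (illustrative only):
# (is_member, has_coupon) -> expected discount in percent.
DECISION_TABLE = {
    (True, True): 20,
    (True, False): 10,
    (False, True): 5,
    (False, False): 0,
}


def missing_rules(table, n_conditions):
    """Return the condition combinations that have no rule in the table."""
    all_combos = product([True, False], repeat=n_conditions)
    return [combo for combo in all_combos if combo not in table]


def expected_discount(is_member, has_coupon):
    """Look up the expected result for one combination of conditions."""
    return DECISION_TABLE[(is_member, has_coupon)]
```

Calling `missing_rules(DECISION_TABLE, 2)` returns an empty list when every combination has a rule, which is a quick way to spot gaps before writing test cases.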

This approach improves test case creation by using orthogonal arrays. These arrays help testers cover many input scenarios with fewer test cases.

By eliminating redundancy, it concentrates on the most critical combinations, thereby conserving time and resources throughout the testing process.

It ensures that the important interactions between inputs are tested without burdening the tester with an excessive number of cases.
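To make the reduction concrete, here is a small sketch using the standard L4 orthogonal array, which covers every pair of values for three two-level factors in four runs instead of eight. The factor names and values in `FACTORS` are assumptions chosen for illustration.

```python
from itertools import combinations, product

# L4 orthogonal array: 3 two-level factors in 4 runs instead of 2**3 = 8.
L4 = [
    (0, 0, 0),
    (0, 1, 1),
    (1, 0, 1),
    (1, 1, 0),
]

# Hypothetical factors for a table-testing scenario (illustrative only).
FACTORS = {
    "browser": ("Chrome", "Firefox"),
    "role": ("admin", "viewer"),
    "dataset": ("empty", "large"),
}


def covers_all_pairs(rows, levels=(0, 1)):
    """Check that every pair of columns contains every pair of levels."""
    wanted = set(product(levels, repeat=2))
    for a, b in combinations(range(len(rows[0])), 2):
        seen = {(row[a], row[b]) for row in rows}
        if seen != wanted:
            return False
    return True


def to_test_cases(rows, factors):
    """Translate array rows (level indices) into named test inputs."""
    names = list(factors)
    return [
        {name: factors[name][level] for name, level in zip(names, row)}
        for row in rows
    ]
```

Here `covers_all_pairs(L4)` is true, while dropping any one row breaks pairwise coverage. Note that the array covers pairs of inputs, not every three-way combination.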

Extended decision tables improve regular decision tables by including more details such as priorities, likely results, or specific test data.

They provide more information for each test case, simplifying understanding and execution.

They link conditions to their expected results, which helps track requirements during testing.

Cause-effect graphs, though not always in table form, can be shown in tables to show how different causes lead to specific effects.

They make it easier to understand complicated connections between inputs and their results.

They are well suited to detailed business rules that require thorough checking.

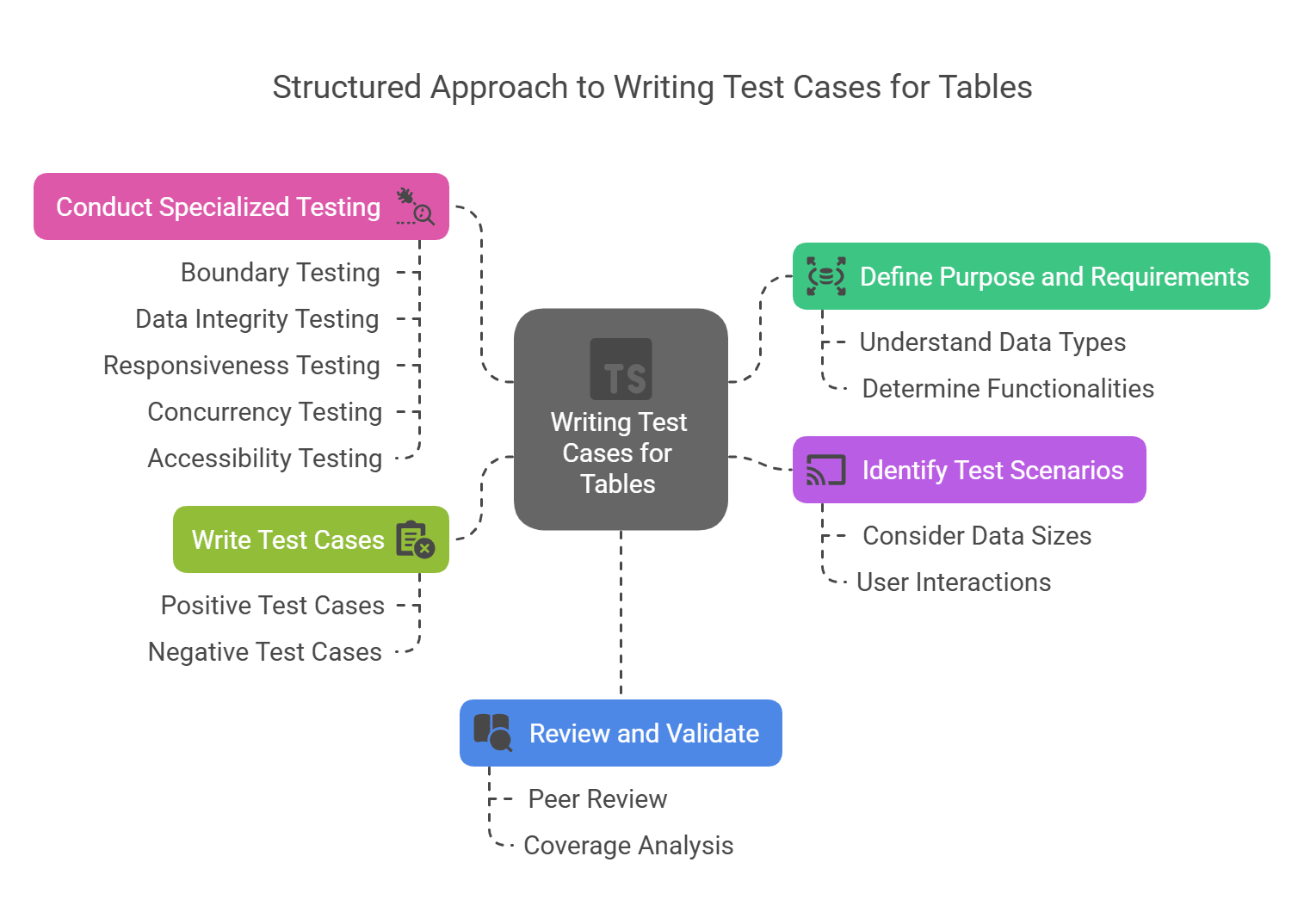

Test cases for tables play a crucial role in ensuring the accuracy, functionality, and user-friendliness of data presentation within digital applications. Properly constructed test cases can identify potential issues and weaknesses in table performance, ultimately contributing to a seamless user experience. To effectively write test cases for tables, follow this structured approach:

Before writing test cases, thoroughly understand the table’s purpose, the type of data it will display, and its required functionalities. This foundational knowledge helps in creating relevant and meaningful test cases.

Determine the different scenarios the table is expected to handle. Consider data types, sizes, and formats, as well as user interactions with the table. Identifying these scenarios helps in covering all possible use cases.

Develop test cases that validate expected behaviors under normal conditions. These include:

Table loads with the correct number of rows and columns.

Sorting works correctly when clicking on column headers.

Filtering returns accurate results.

Pagination functions properly.

Data updates dynamically when modified.

Row selection operates as expected.

Negative test cases help uncover vulnerabilities by testing the table under extreme or incorrect conditions, such as:

Entering incorrect data types.

Exceeding size limitations.

Attempting unauthorized actions.

Handling empty datasets gracefully.

Managing slow or failed API responses effectively.

Have test leads or other engineers review the test cases to ensure accuracy, correctness, and broad coverage of possible scenarios.

Test the table’s limits by assessing its behavior under maximum and minimum data input conditions. This includes:

Handling large datasets efficiently.

Processing long text entries correctly.

Managing size constraints without breaking functionality.

Verify that the table displays accurate and consistent data. Ensure:

Data displayed matches the real data source.

There are no inconsistencies or inaccuracies in table records.

Real-time updates are reflected correctly.

Check how the table adapts to different screen sizes and orientations. Ensure:

Table layout remains intact on various devices.

Content remains readable and user-friendly.

Interaction elements (buttons, dropdowns) are accessible on mobile and tablets.

Simulate multiple users accessing and interacting with the table simultaneously. Evaluate:

Handling of concurrent data updates.

Prevention of data corruption or overwrites.

Performance under high user load.

Ensure the table is usable for individuals with disabilities by testing:

Keyboard navigation functionality.

Compatibility with screen readers.

Compliance with accessibility standards (WCAG, ARIA attributes).

Develop test cases to check how the table responds to unexpected inputs or system errors. Verify:

Clear and informative error messages are displayed.

Graceful handling of system failures.

Proper logging of errors for debugging.

Writing test cases for tables involves verifying various aspects like data integrity, UI rendering, sorting, filtering, pagination, responsiveness, and accessibility. Here’s a structured approach:

These test cases ensure the table functions correctly.

Verify the table loads correctly with default data.

Check if the table displays the correct number of rows and columns.

Verify column headers are displayed correctly.

Check if sorting works correctly when clicking on column headers.

Verify filtering/searching functionality returns the correct results.

Ensure pagination works correctly (if applicable).

Check if data updates dynamically when modified.

Verify correct data is displayed in each cell.

Test if row selection works correctly (if applicable).

Check if row and column resizing works properly.

Verify the table handles empty data gracefully.

These ensure the table looks and behaves correctly.

Verify the table UI renders correctly across different browsers.

Check alignment of rows and columns.

Ensure text is properly wrapped or truncated if content is long.

Verify table responsiveness on different screen sizes.

Check the hover, click, and focus states of rows.

Ensure proper spacing between rows and columns.

These test cases verify the table’s data manipulation features.

Check if sorting works in ascending and descending order.

Verify sorting logic for numeric, text, and date columns.

Ensure filtering works with partial and exact keyword matches.

Test case-insensitive filtering.

Verify filtering with multiple conditions (if supported).

Check if clearing the filter resets the table.
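The sorting and filtering checks above can be sketched as plain Python helpers that work on rows already read from the table. The sample `ROWS` data and the function names are assumptions, and reading the rows from a real UI is left out.

```python
from datetime import date

# Hypothetical rows, as they might be read from a rendered table.
ROWS = [
    {"name": "alice", "amount": 120, "joined": date(2023, 5, 1)},
    {"name": "Bob", "amount": 15, "joined": date(2021, 11, 20)},
    {"name": "Carol", "amount": 7, "joined": date(2022, 1, 3)},
]


def is_sorted(values, descending=False):
    """Return True if values are in non-decreasing (or non-increasing) order."""
    pairs = list(zip(values, values[1:]))
    if descending:
        return all(a >= b for a, b in pairs)
    return all(a <= b for a, b in pairs)


def filter_rows(rows, keyword, column="name"):
    """Case-insensitive partial match; an empty keyword returns every row."""
    needle = keyword.casefold()
    return [row for row in rows if needle in str(row[column]).casefold()]
```

One case worth testing explicitly is a numeric column sorted as text: the strings `"120"`, `"15"`, `"7"` are in ascending text order, but the numbers they represent are not, so `is_sorted` should be applied to values converted to their real type.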

If the table has pagination, test the following:

Verify the number of rows per page matches the settings.

Ensure navigation between pages works properly.

Check if the ‘Next’ and ‘Previous’ buttons function correctly.

Verify correct data is displayed on each page.

Test dynamic changes in pagination when rows are added/deleted.
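A rough sketch of the pagination checks, assuming 1-based page numbers and a fixed page size. `paginate` and `page_count` are hypothetical reference helpers that a test could compare the table's actual behavior against.

```python
import math


def paginate(rows, page, page_size):
    """Return the rows expected on a given 1-based page."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be at least 1")
    start = (page - 1) * page_size
    return rows[start:start + page_size]


def page_count(total_rows, page_size):
    """Number of pages; an empty table is assumed to still show one page."""
    return max(1, math.ceil(total_rows / page_size))
```

With 25 rows and a page size of 10, the last page should hold 5 rows. After 5 rows are deleted, the page count should drop from 3 to 2 and page 3 should be empty.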

These ensure the table performs well under load.

Test the table’s load time with a large dataset.

Verify lazy loading or infinite scrolling works correctly.

Ensure filtering and sorting performance with thousands of records.

Check how the table handles slow network conditions.

Ensure the table meets accessibility standards.

Verify keyboard navigation (Tab, Enter, Arrow keys).

Ensure screen readers can read the table properly.

Check for proper contrast and color usage.

Verify ARIA attributes for screen readers.

These test cases cover negative scenarios, edge cases, and error handling.

Verify how the table handles special characters.

Check for XSS vulnerabilities (injection of scripts into cells).

Test table behavior when a user inputs invalid data.

Check how the table handles slow or failed API responses.

Verify if the table breaks when extreme data is entered (e.g., very long text, negative numbers, etc.).
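As an illustration of the special-character and XSS checks, the sketch below assumes a hypothetical `render_cell` function that turns a value into an HTML table cell. It uses Python's standard `html.escape`; a real application would rely on its own rendering layer.

```python
from html import escape


def render_cell(value, max_len=50):
    """Render one value as an HTML cell: truncate long text, then escape it."""
    text = "" if value is None else str(value)
    if len(text) > max_len:
        # Keep the cell within max_len characters, ending with an ellipsis.
        text = text[: max_len - 1] + "…"
    return f"<td>{escape(text)}</td>"
```

A test can then feed in a script payload, an ampersand, a negative number, a missing value, and very long text, and assert that the markup stays intact and no raw `<script>` tag reaches the page.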

| Test Case ID | Test Scenario | Steps | Expected Result | Status |
|---|---|---|---|---|
| TC_01 | Verify table loads correctly | Open page with table | Table loads with correct data | Pass/Fail |
| TC_02 | Check column sorting | Click on column header | Data sorts correctly | Pass/Fail |
| TC_03 | Verify pagination | Click "Next" button | Table shows next page of data | Pass/Fail |

Testing tables are effective, but they come with challenges. The biggest is complexity: the number of possible combinations grows quickly as conditions are added, which makes large tables hard to build and review.

Moreover, maintaining and updating test tables can be time-consuming, especially as software requirements evolve. This can potentially result in outdated or incomplete test scenarios.

The absence of standardized practices for specific industries can also complicate implementation, necessitating customization based on project needs.

Define clear objectives and align testing tables with specific software requirements.

Simplify test cases by focusing on high-priority scenarios and limiting the number of test combinations.

As software evolves, update testing tables to reflect new functionality and remove obsolete scenarios.

Using automation tools can streamline repetitive tests, saving time and minimizing human errors.

This tool helps manage decisions logically and automatically, making sure they follow business rules.

It's great for companies needing good decision management.

This tool combines decision tables with Excel, making it easy to use and control rules.

It is ideal for teams that prefer to work with spreadsheets for their decision logic.

Drools is a robust rules engine that makes it easy to create and manage complex decision logic.

It is a common choice for organizations that need advanced rule processing capabilities.

Although not specifically designed for decision table testing, Excel offers a versatile spreadsheet interface that is great for defining and testing decisions.

It is a popular choice for quick prototyping of decision tables.

Corticon provides a graphical interface for decision tables and tools for executing rules.

It is beneficial for teams that are interested in visual modeling tools.

Tools can automatically generate test cases from the rules in a decision table. Some AI testing agents, such as BotGauge, use GenAI to generate test cases for end-to-end testing. Each distinct combination of inputs becomes its own test case, which makes tests easier to design.
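The core of this generation step can be sketched in a few lines. The login conditions, the `expected_action` rule, and the `TC_nn` ID format are assumptions for illustration and do not describe how any particular tool works.

```python
from itertools import product

# Hypothetical conditions for a login form (illustrative only).
CONDITIONS = {
    "valid_username": (True, False),
    "valid_password": (True, False),
}


def expected_action(valid_username, valid_password):
    """Hypothetical business rule: both inputs must be valid."""
    return "grant access" if valid_username and valid_password else "show error"


def generate_test_cases(conditions, rule):
    """Create one test case per combination of condition values."""
    names = list(conditions)
    cases = []
    for number, combo in enumerate(product(*conditions.values()), start=1):
        inputs = dict(zip(names, combo))
        cases.append(
            {"id": f"TC_{number:02d}", "inputs": inputs, "expected": rule(**inputs)}
        )
    return cases
```

Two conditions with two values each yield four test cases. Adding a condition doubles the count, which is why prioritizing combinations matters for larger tables.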

Once the test cases are generated, they can be transformed into automated test scripts using frameworks such as Selenium, TestNG, and JUnit.

Selenium is popular for automating web applications across various browsers, while TestNG offers detailed reporting capabilities.

You can design data-driven tests that read their inputs and expected results from a decision table, so a single test script runs against many input combinations. This adds flexibility and reduces duplicated code in tests.
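A minimal sketch of this pattern using Python's built-in `unittest` and its `subTest` context manager. The `shipping_fee` function and its rules are hypothetical stand-ins for the application under test.

```python
import unittest


def shipping_fee(order_total, is_express):
    """Hypothetical function under test (illustrative rules only)."""
    fee = 0 if order_total >= 50 else 5
    return fee + (10 if is_express else 0)


# Each row of the decision table: (order_total, is_express, expected_fee).
DECISION_TABLE = [
    (60, False, 0),
    (60, True, 10),
    (20, False, 5),
    (20, True, 15),
]


class ShippingFeeTest(unittest.TestCase):
    def test_decision_table(self):
        # One test method covers every row; subTest reports failures per row.
        for order_total, is_express, expected in DECISION_TABLE:
            with self.subTest(order_total=order_total, is_express=is_express):
                self.assertEqual(shipping_fee(order_total, is_express), expected)
```

Saved in a test module, this can be run with `python -m unittest`. Adding a scenario means adding a row to `DECISION_TABLE` rather than writing a new test method.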

Including table testing within continuous integration/continuous deployment (CI/CD) pipelines ensures that tests are consistently executed with each build, providing swift feedback on the quality of the software.

Executing automated tests involves running test scripts on the application you're testing (often called the AUT). It starts with setting up the test environment and making sure everything is ready. Then, you execute the test scripts, gathering results as they run.

Finally, you review these results to understand how well the app performed and whether any issues popped up. It's as simple as that!

Testing tables play a crucial role in software testing, especially for applications that involve complex decision-making processes. By grasping the different types of table testing and adhering to best practices, Quality Assurance (QA) teams can efficiently manage these tables, ensuring thorough coverage. The integration of automation tools further boosts efficiency, allowing testers to reliably verify functionality across various scenarios.

DTT in testing stands for Decision Table Testing, a test design technique using tables to represent combinations of inputs and corresponding outputs.

Decision Table Technique is a method used in software testing to create test cases based on different input combinations and their expected results.

Test Case Table is a structured format to document multiple test cases, often including input conditions, actions, expected outcomes, and pass/fail status.
